AWARDEES: Mahzarin Banaji, Anthony Greenwald, Brian Nosek

SCIENCE: Implicit Bias

FEDERAL FUNDING AGENCIES: National Science Foundation, National Institutes of Health

Mahzarin Banaji

With speed and precision, your eyes dart across the screen as your mind makes connections and associations between words. In a short time, a computer program will reveal what your mind is not aware of: implicit associations you carry about gender, race, ethnicity, nationality, sexuality, and more. You are on the Project Implicit website, and you have just joined millions of others who have used this site to take an Implicit Association Test (IAT). These tests ask you to sort pictures and words into categories, measuring your speed and accuracy as you try to associate attributes like “good” and “bad” with social groups. Tests that use speed and accuracy to measure the mind have been around in psychology for a century, but the IAT revealed something new: the resistance our minds show when trying to associate the concepts “Black American” and “White American” equally with attributes that signify good and bad, or when trying to associate the categories “elderly” and “young” with “good” and “bad.” The research on implicit bias suggests that we all carry cultural beliefs and attitudes in our heads, whether we know it or not, and whether we like it or not. The implications are profound.

Her colleagues describe Mahzarin Banaji as direct, thoughtful, and driven. When you speak with her, she listens. And as she listens, she connects. The connections she makes and the questions she asks are purposeful, and when she starts asking questions, people pay attention. Born and raised in India and educated at the Ohio State University, Mahzarin joined the psychology faculty at Yale University in 1986. At Ohio State, she had connected with her future mentor, Anthony Greenwald. At Yale, Brian Nosek would join her lab as a student in 1996. These connections would shape an idea that has revolutionized experimental psychology and made an impact in business, law, education, the military, and healthcare. And it began, as basic research often does, not by seeking a solution but by asking the question “Why?” Why do people report having little or no bias, and certainly no animosity toward social groups, when data from everyday behavior reveal disparities in access, opportunity, and treatment?

Anthony Greenwald

Mahzarin met Anthony on her first day in the United States, and he served as her Ph.D. advisor at Ohio State. More than 30 years later, in 2013, they would publish the bestselling book “Blindspot: Hidden Biases of Good People,” which brought the idea of implicit bias to the broader public. When Mahzarin arrived at Ohio State, experimental psychology was an evolving field, as described in the recent paper “The Implicit Revolution” in American Psychologist. Motivating her research was the discovery that people with amnesia, who could not recollect information in the usual way when asked “Tell me what you had for breakfast this morning,” nevertheless showed “savings” in memory. This idea, that people may be unable to recollect information yet still hold it in mind and even use it, inspired the first experiments Mahzarin conducted at Yale University. Modeling the studies on the work of Larry Jacoby, she showed that although men’s and women’s names had equal status in memory, a bias emerged: people were far less likely to judge women’s names as famous than men’s names. But why? When asked, not a single participant suggested that the gender of the names might have been a factor. And yet the gender bias in the results was clear.

Mahzarin and Anthony suggested that perhaps the people who showed the bias did not experience it because it was not consciously accessible to them. And if that was true, how pervasive was such “implicit” information about social groups? This needed further study, so the collaborators applied for and received funding from the National Science Foundation to extend their exploration of the human mind. Because no existing term described this phenomenon, Mahzarin asked an admired colleague, Robert Crowder, whether adapting a term used by memory scientists (“implicit memory”) would be a good idea; he responded that the term “implicit attitudes” (the title of the first NSF grant) was indeed appropriate.

Brian Nosek

At Yale, two of Mahzarin’s students, Curtis Hardin and Alex Rothman, worked with her on studies showing that priming people with the same concept, such as “assertive,” did not lead to equal assessments of men and women. They titled their paper “Implicit Stereotyping,” and it was the first paper to use the term “implicit” in the sense it has since acquired. Anthony and Mahzarin then wrote a paper, now highly cited in the academic literature, titled “Implicit Social Cognition: Attitudes, Stereotypes, and Self-Esteem,” which explained this new terminology and first used the term “implicit bias.” That paper concluded that developing methods to study implicit cognition should be a priority, and Anthony subsequently conducted the first study using the Implicit Association Test.

Over the next decade, many students in their labs conducted experiments to identify the nature and boundary conditions of the IAT. The early data were met with surprise and even resistance from others in the scientific community: how could scientists, the very people who studied prejudice, show evidence of implicit bias themselves? Mahzarin and Anthony were clear that implicit bias was not the same thing as prejudice, which is a conscious feeling of antipathy. What they saw in the data were the roots of bias. One objection they encountered was that whatever the IAT was measuring, it was not an attitude or preference in the sense that social psychologists used those terms. Elizabeth Phelps, a neuroscientist and colleague of Mahzarin, suggested pairing the IAT race test with fMRI (functional magnetic resonance imaging) to observe the brain’s response to Black and White faces. If the IAT detected something akin to an attitude, a person’s score on the test should be related to greater activation in the amygdala, a brain region known to be involved in emotional learning and fear conditioning. Indeed, the results showed such a correlation: those with higher IAT race bias also showed greater amygdala activation.

Brian Nosek came to Yale to pursue a graduate degree after a late switch from his undergraduate work in computer science to psychology and women’s studies. Driven by an interest in better understanding the human mind, he applied to work with Mahzarin, a leading expert. After weeks without hearing back, he emailed her to inquire. Mahzarin did not recognize Brian’s name from her packet of applicants, but she was interested in his background in computer science. She tracked down his application and took a chance on this student who was following his passion. Perhaps she saw in him what so many see in her: the drive that makes an excellent scientist. The serendipity of that email, and her choice to bring on a student with a computer science background, would help write a bold new future, not just for research on implicit bias but for its broader dissemination on the internet. Brian now heads the Center for Open Science, a project he traces to his own early experiences doing research on implicit cognition and seeing the power of collective action.

With continued support from NSF as well as the National Institutes of Health, researchers strengthened the methodology and built data sets. And the more work they did, the louder a few critics became. What was most frustrating about the criticism was that it was not always the science itself under attack. After all, the psychological science community readily accepted results showing an implicit preference for concepts like “flower.” That type of result was treated as legitimate because it fit our conscious belief that flowers are beautiful and good. But when the method showed that “female” and “leader” could not be associated as quickly as “male” and “leader,” or that dark skin was more negative than light skin, or that White Americans were viewed as more American than equally American citizens who were Asian American, the result suddenly became questionable, even dismissed as bunk.

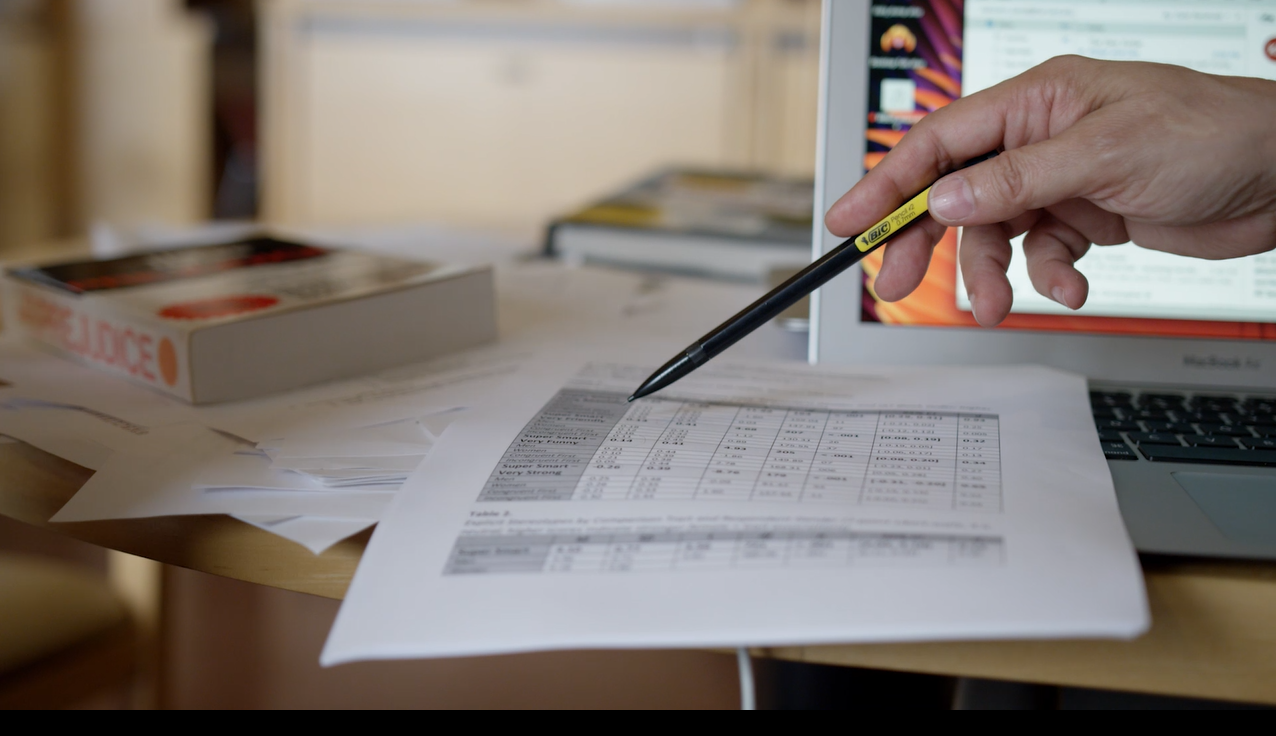

“We could build a website so that more people could try out the tests,” Brian suggested. “They are so interesting to do and will get people involved in basic research on bias.” The proposal intrigued Mahzarin and Anthony, so Brian got to work building it. He barely slept for six weeks and called in favors from many friends in technology to get it done.

When the website went live in 1998, the team began seeing thousands of users a day taking the tests, flooding the researchers with data. Within the first month, they had received 50,000 completed tests. The team knew that they had struck a chord, perhaps even a nerve. People cared about the test results. They were challenged. They argued. They listened. If you go to the Project Implicit site today, you can access tests of bias related to gender, sexuality, race, religion, and many more topics. The more tests that went up, the more interest the work received. Slowly, the term “implicit bias” caught on. Today it isn’t just a term used by the scientific community; it has become part of our cultural lexicon. Political candidates debate it. Businesses use it to improve the quality of decision-making. Teachers use it to explore whether they are teaching all students equally. Police departments are engaging with it to improve law enforcement practices. Legal scholars and practitioners are asking about its implications for the law and working to create unbiased courtrooms. Clinical psychologists use it to detect mental states and track whether treatments are effective. And doctors and healthcare providers use the test to ask whether their biases may lead them, quite implicitly, to behave in ways that run counter to their own values of equal treatment.

The simplicity of the implicit bias test, which asks people to associate concepts and attributes, was the key to its success. With a series of clicks, people could discover whether they held implicit biases that might be inconsistent with their conscious values and could affect their decisions. Once you know, you know. And knowledge can be a powerful motivator for change.